- Unset the HTTP proxy environment variables for `TestWebhookProxy`.

- Resolves #2132

(cherry picked from commit 244b9786fc)

(cherry picked from commit 8602dfa6a2)

(cherry picked from commit 8621449209)

(cherry picked from commit aefa77f917)

Refactor the Hash interfaces and centralize the hash function. This will
make it easier to introduce different hash functions later on.

This forms the "no-op" part of the SHA256 enablement patch.

When `webhook.PROXY_URL` has been set, the old code will check if the

proxy host is in `ALLOWED_HOST_LIST` or reject requests through the

proxy. It requires users to add the proxy host to `ALLOWED_HOST_LIST`.

However, it actually allows all requests to any port on the host, when

the proxy host is probably an internal address.

But things may be even worse. `ALLOWED_HOST_LIST` doesn't really work

when requests are sent to the allowed proxy, and the proxy could forward

them to any hosts.

This PR fixes it by:

- If the proxy has been set, always allow connections to the proxy's host
and port.

- Check `ALLOWED_HOST_LIST` before forwarding.

This PR removes `TestOptions.GiteaRoot` from the second parameter of
`unittest.MainTest`. The root directory is now detected from the current
working directory.

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Attempt to fix #25744

Fix the log level used when a repository is deleted and the hook is then
not found by id. That should be logged at warn level to reduce noise in
the logs.

---------

Co-authored-by: delvh <dev.lh@web.de>

Previously there was a "graceful function", `RunWithShutdownFns`, mainly
for modules that don't support context. The old queue system didn't work
well with context, so the old queues needed it.

After the queue refactoring, the new queue works well with context, so Go
contexts can be used as much as possible. `RunWithShutdownFns` can be
removed (replaced by `RunWithCancel` for the context cancel mechanism) and
the related code can be simplified.

This PR also fixes some legacy queue-init problems, eg:

* typo : archiver: "unable to create codes indexer queue" => "unable to

create repo-archive queue"

* no nil check for failed queues, which causes unfriendly panic

After this PR, many goroutines get better display names.

This PR replaces all string refNames with the type `git.RefName` to make
the code more maintainable.

Fix #15367
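To illustrate why a dedicated type helps, here is a simplified stand-in. It is only a sketch: the method set and the constants are assumptions for the example and do not reproduce the real `git.RefName` implementation.

```go
package main

import (
	"fmt"
	"strings"
)

// RefName is a simplified stand-in for a typed Git reference name.
type RefName string

const (
	branchPrefix = "refs/heads/"
	tagPrefix    = "refs/tags/"
)

// IsBranch reports whether the reference points into refs/heads/.
func (r RefName) IsBranch() bool { return strings.HasPrefix(string(r), branchPrefix) }

// IsTag reports whether the reference points into refs/tags/.
func (r RefName) IsTag() bool { return strings.HasPrefix(string(r), tagPrefix) }

// ShortName strips the branch or tag prefix; other refs are returned unchanged.
func (r RefName) ShortName() string {
	s := string(r)
	switch {
	case r.IsBranch():
		return strings.TrimPrefix(s, branchPrefix)
	case r.IsTag():
		return strings.TrimPrefix(s, tagPrefix)
	}
	return s
}

func main() {
	for _, ref := range []RefName{"refs/heads/main", "refs/tags/v1.0.0", "refs/pull/1/head"} {
		fmt.Println(ref.ShortName(), ref.IsBranch(), ref.IsTag())
	}
}
```

With a distinct type, the compiler rejects passing an arbitrary string where a reference name is expected, and prefix handling lives in one place.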

Replaces #23070

It also fixes a bug where tags were not synced, because `git remote --prune
origin` does not remove local tags that were removed on the remote.
We should in fact use `git fetch --prune --tags origin` rather than `git
remote update origin` to do the sync.

An answer from ChatGPT, for reference:

> If the `git fetch --prune --tags` command is not working as expected,
> there could be a few reasons why. Here are a few things to check:
>
> Make sure that you have the latest version of Git installed on your
> system. You can check the version by running `git --version` in your
> terminal. If you have an outdated version, try updating Git and see if
> that resolves the issue.
>
> Check that your Git repository is properly configured to track the
> remote repository's tags. You can check this by running `git config
> --get-all remote.origin.fetch` and verifying that it includes
> `+refs/tags/*:refs/tags/*`. If it does not, you can add it by running
> `git config --add remote.origin.fetch "+refs/tags/*:refs/tags/*"`.
>
> Verify that the tags you are trying to prune actually exist on the
> remote repository. You can do this by running `git ls-remote --tags
> origin` to list all the tags on the remote repository.
>
> Check if any local tags have been created that match the names of tags
> on the remote repository. If so, these local tags may be preventing the
> `git fetch --prune --tags` command from working properly. You can delete
> local tags using the `git tag -d` command.

---------

Co-authored-by: delvh <dev.lh@web.de>

close https://github.com/go-gitea/gitea/issues/16321

Provided a webhook trigger for requesting someone to review the Pull

Request.

Some modifications have been made to the returned `PullRequestPayload`

based on the GitHub webhook settings, including:

- adding a description of the current reviewer object as
`RequestedReviewer`.
- setting the action to either **review_requested** or
**review_request_removed** based on the operation.
- adding the `RequestedReviewers` field to `issues_model.PullRequest`.
This field is loaded into the PullRequest through
`LoadRequestedReviewers()` when `ToAPIPullRequest` is called.

After the Pull Request is merged, I will supplement the relevant

documentation.

# ⚠️ Breaking

Many deprecated queue config options are removed (actually, they should

have been removed in 1.18/1.19).

If you see the fatal message when starting Gitea: "Please update your

app.ini to remove deprecated config options", please follow the error

messages to remove these options from your app.ini.

Example:

```

2023/05/06 19:39:22 [E] Removed queue option: `[indexer].ISSUE_INDEXER_QUEUE_TYPE`. Use new options in `[queue.issue_indexer]`

2023/05/06 19:39:22 [E] Removed queue option: `[indexer].UPDATE_BUFFER_LEN`. Use new options in `[queue.issue_indexer]`

2023/05/06 19:39:22 [F] Please update your app.ini to remove deprecated config options

```

Many options in `[queue]` are dropped, including:

`WRAP_IF_NECESSARY`, `MAX_ATTEMPTS`, `TIMEOUT`, `WORKERS`,

`BLOCK_TIMEOUT`, `BOOST_TIMEOUT`, `BOOST_WORKERS`, they can be removed

from app.ini.

# The problem

The old queue package has some legacy problems:

* complexity: I doubt many people could tell how it works.
* maintainability: too many channels and mutexes/conds are mixed together,
and too many different structs/interfaces depend on each other.
* stability: due to the complexity and poor maintainability, there are
sometimes strange bugs that are difficult to debug, and some code has no
tests (indeed, some code is difficult to test because a lot of things are
mixed together).

* general applicability: although it is called "queue", its behavior is

not a well-known queue.

* scalability: it doesn't seem easy to make it work with a cluster

without breaking its behaviors.

It came from some very old code to "avoid breaking", however, its

technical debt is too heavy now. It's a good time to introduce a better

"queue" package.

# The new queue package

It keeps using the old config and concepts as much as possible.

* It only contains two major kinds of concepts:
    * The "base queue": channel, levelqueue, redis
        * They have the same abstraction, the same interface, and they are
          tested by the same testing code.
    * The "WorkerPoolQueue": it uses the "base queue" to provide the "worker
      pool" function and calls the "handler" to process the data in the base
      queue.
* The new code doesn't do "PushBack"
    * Think about a queue with many workers: "PushBack" can't guarantee
      the order of re-queued unhandled items, so the new code just does a
      "normal push".
* The new code doesn't do "pause/resume"
    * "pause/resume" was designed to handle some handlers' failures, e.g.
      the document indexer (elasticsearch) is down.
    * If a queue is paused for a long time, either the producers block or the
      new items are dropped.
    * The new code doesn't do such a "pause/resume" trick; it's not common
      queue behavior and it doesn't help much.
    * If there are unhandled items, the "push" function just blocks for a
      few seconds, then re-queues them and retries.
* The new code doesn't do "worker booster"
    * Gitea's queue handlers are light functions and the only cost is the
      goroutine, so it doesn't make sense to "boost" them.
    * The new code only uses a "max worker number" to limit the concurrent
      workers.
* The new "Push" never blocks forever
    * Instead of creating more and more blocking goroutines, returning an
      error is more friendly to the server and to the end user.

There are more details in code comments: eg: the "Flush" problem, the

strange "code.index" hanging problem, the "immediate" queue problem.

Almost ready for review.

TODO:

* [x] add some necessary comments during review

* [x] add some more tests if necessary

* [x] update documents and config options

* [x] test max worker / active worker

* [x] re-run the CI tasks to see whether any test is flaky

* [x] improve the `handleOldLengthConfiguration` to provide more

friendly messages

* [x] fine tune default config values (eg: length?)

## Code coverage:

`HookEventType` of pull request review comments should be

`HookEventPullRequestReviewComment` but some event types are

`HookEventPullRequestComment` now.

minio/sha256-simd provides additional acceleration for SHA256 using

AVX512, SHA Extensions for x86 and ARM64 for ARM.

It provides a drop-in replacement for crypto/sha256 and if the

extensions are not available it falls back to standard crypto/sha256.

---------

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

Ensure that issue pullrequests are loaded before trying to set the

self-reference.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: delvh <leon@kske.dev>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

Some bugs are caused by a lack of unit tests in fundamental packages. This
PR refactors the `setting` package so that creating a unit test is easier
than before.

- All `LoadFromXXX` functions have been split into two functions:
`InitProviderFromXXX` and `LoadCommonSettings`. The first only contains
the code to create or instantiate an ini file. The second loads the
common settings.
- It also renames all functions in `setting` from `newXXXService` to
`loadXXXSetting` or `loadXXXFrom` to make the function names less
confusing.
- Rename `XORMLog` to `SQLLog` because it's a better name.

Maybe we should eventually move these `loadXXXSetting` functions into the
`XXXInit` functions? Any ideas?

---------

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: delvh <dev.lh@web.de>

To avoid loading the same data more than once within an HTTP request, we
can use a context cache. For example, a page may load the same user (same
id) from the database in different areas of the page, but the code lives
in two different, deeply nested places. How should we share the user?
With this PR, if both entry functions accept `context.Context` as the
first parameter, we just need to refactor `GetUserByID` to reuse the user
from the context cache. Then it will not be loaded twice in one HTTP
request.

But of course, sometimes we would like to reload an object from the

database, that's why `RemoveContextData` is also exposed.

The core context cache is here. It defines a new context

```go

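// cacheContext holds the per-context cached data, grouped by type and key.
type cacheContext struct {
	ctx  context.Context
	data map[any]map[any]any
	lock sync.RWMutex
}

var cacheContextKey = struct{}{}

// WithCacheContext returns a child context that carries an empty cache.
func WithCacheContext(ctx context.Context) context.Context {
	return context.WithValue(ctx, cacheContextKey, &cacheContext{
		ctx:  ctx,
		data: make(map[any]map[any]any),
	})
}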

```

Then you can use the four functions below to read, write, or delete data
within the same context.

```go

func GetContextData(ctx context.Context, tp, key any) any
func SetContextData(ctx context.Context, tp, key, value any)
func RemoveContextData(ctx context.Context, tp, key any)
func GetWithContextCache[T any](ctx context.Context, cacheGroupKey string, cacheTargetID any, f func() (T, error)) (T, error)

```

Then let's take a look at how `system.GetSetting` implements it.

```go

func GetSetting(ctx context.Context, key string) (string, error) {
	// Check the context cache first, then fall back to the global cache.
	return cache.GetWithContextCache(ctx, contextCacheKey, key, func() (string, error) {
		// On a global cache miss, load the setting from the database.
		return cache.GetString(genSettingCacheKey(key), func() (string, error) {
			res, err := GetSettingNoCache(ctx, key)
			if err != nil {
				return "", err
			}
			return res.SettingValue, nil
		})
	})
}

```

First, it checks whether the context data includes the setting object for
the key. If not, it queries the global cache, which may be an in-memory or
a Redis cache. If that misses too, it loads the object from the database.
Finally, if the object came from the global cache or the database, it is
stored in the context cache.

An object stored in the context cache is only destroyed once the context
goes away.

The `commit_id` property name is the same as equivalent functionality in

GitHub. If the action was not caused by a commit, an empty string is

used.

This can for example be used to automatically add a Resolved label to an

issue fixed by a commit, or clear it when the issue is reopened.

Fixes #22391

This field is optional for Discord, however when it exists in the

payload it is now validated.

Omitting it entirely just makes Discord use the default for that

webhook, which is set on the Discord side.

Signed-off-by: jolheiser <john.olheiser@gmail.com>

Previously, there was an `import services/webhooks` inside

`modules/notification/webhook`.

This import was removed (after fighting against many import cycles).

Additionally, `modules/notification/webhook` was moved to

`modules/webhook`,

and a few structs/constants were extracted from `models/webhooks` to

`modules/webhook`.

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Change all license headers to comply with REUSE specification.

Fix #16132

Co-authored-by: flynnnnnnnnnn <flynnnnnnnnnn@github>

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

When re-retrieving hook tasks from the DB double check if they have not

been delivered in the meantime. Further ensure that tasks are marked as

delivered when they are being delivered.

In addition:

* Improve the error reporting and make sure that the webhook task

population script runs in a separate goroutine.

* Only get hook task IDs out of the DB instead of the whole task when

repopulating the queue

* When repopulating the queue make the DB request paged
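Fetching only IDs in pages can be sketched as below. The database is replaced by a sorted in-memory slice, and `nextUndeliveredIDs` and `repopulate` are hypothetical helpers; paging by "IDs greater than the last one seen" is an assumption of this example, not necessarily how the PR queries the DB.

```go
package main

import "fmt"

// nextUndeliveredIDs is a stand-in for a DB query that returns at most limit
// task IDs greater than afterID, in ascending order.
func nextUndeliveredIDs(sorted []int64, afterID int64, limit int) []int64 {
	var out []int64
	for _, id := range sorted {
		if id > afterID {
			out = append(out, id)
			if len(out) == limit {
				break
			}
		}
	}
	return out
}

// repopulate pushes every undelivered ID to enqueue, one page at a time, and
// returns how many IDs were enqueued. Assumes positive IDs.
func repopulate(sorted []int64, limit int, enqueue func(int64)) int {
	total := 0
	var lastID int64
	for {
		ids := nextUndeliveredIDs(sorted, lastID, limit)
		if len(ids) == 0 {
			return total
		}
		for _, id := range ids {
			enqueue(id)
			total++
		}
		lastID = ids[len(ids)-1]
	}
}

func main() {
	pending := []int64{3, 7, 8, 15, 21}
	n := repopulate(pending, 2, func(id int64) { fmt.Println("enqueue", id) })
	fmt.Println("total:", n)
}
```

Only IDs travel through the queue; the full task would be loaded (and re-checked for delivery) when a worker picks the ID up.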

Ref #17940

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

This PR adds a context parameter to a bunch of methods. Some helper

`xxxCtx()` methods got replaced with the normal name now.

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

The `getPullRequestPayloadInfo` function is widely used in many webhooks.
It works well when a PR is opened or edited, but when we comment in the PR
review panel (not the PR panel), the comment content is not set as
`attachmentText`.

This commit sets the comment content as `attachmentText` for PR reviews,
so webhooks can obtain this information via this function.

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

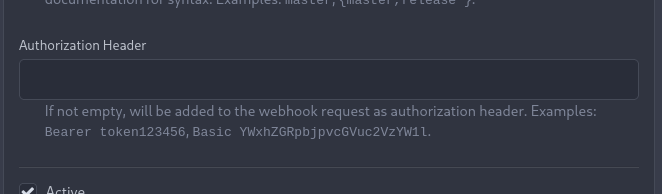

_This is a different approach to #20267, I took the liberty of adapting

some parts, see below_

## Context

In some cases, a webhook endpoint requires some kind of authentication.

The usual way is by sending a static `Authorization` header, with a

given token. For instance:

- Matrix expects a `Bearer <token>` (already implemented, by storing the

header cleartext in the metadata - which is buggy on retry #19872)

- TeamCity #18667

- Gitea instances #20267

- SourceHut https://man.sr.ht/graphql.md#authentication-strategies (this

is my actual personal need :)

## Proposed solution

Add a dedicated encrypted column to the webhook table (instead of storing
it as meta as proposed in #20267), so that it is available for all
present and future hook types (especially the custom ones #19307).

This would also solve the buggy matrix retry #19872.

As a first step, I would recommend focusing on the backend logic and

improve the frontend at a later stage. For now the UI is a simple

`Authorization` field (which could be later customized with `Bearer` and

`Basic` switches):

The header name is hard-coded, since I couldn't find any use case
justifying otherwise.
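On the delivery side, the idea boils down to something like the following sketch. The `webhook` struct, its field names and `newDeliveryRequest` are hypothetical; in the proposal the value would be stored encrypted and decrypted before this point.

```go
package main

import (
	"fmt"
	"net/http"
)

// webhook is a hypothetical, trimmed-down hook record.
type webhook struct {
	URL                 string
	AuthorizationHeader string // e.g. "Bearer <token>"; empty when unset
}

// newDeliveryRequest builds the outgoing request and attaches the static
// Authorization header only when one is configured.
func newDeliveryRequest(w webhook) (*http.Request, error) {
	req, err := http.NewRequest(http.MethodPost, w.URL, nil)
	if err != nil {
		return nil, err
	}
	if w.AuthorizationHeader != "" {
		req.Header.Set("Authorization", w.AuthorizationHeader)
	}
	return req, nil
}

func main() {
	req, err := newDeliveryRequest(webhook{
		URL:                 "https://hooks.example.com/in",
		AuthorizationHeader: "Bearer example-token",
	})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(req.Header.Get("Authorization"))
}
```

Since the header is built from the stored column on every delivery, a retry sends the same credentials as the first attempt.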

## Questions

- What do you think of this approach? @justusbunsi @Gusted @silverwind

- ~~How are the migrations generated? Do I have to manually create a new

file, or is there a command for that?~~

- ~~I started adding it to the API: should I complete it or should I

drop it? (I don't know how much the API is actually used)~~

## Done as well:

- add a migration for the existing matrix webhooks and remove the

`Authorization` logic there

_Closes #19872_

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: Gusted <williamzijl7@hotmail.com>

Co-authored-by: delvh <dev.lh@web.de>

At the moment a repository reference is needed for webhooks. With the

upcoming package PR we need to send webhooks without a repository

reference. For example a package is uploaded to an organization. In

theory this enables the usage of webhooks for future user actions.

This PR removes the repository id from `HookTask` and changes how the
hooks are processed (see `services/webhook/deliver.go`). In a follow-up
PR I want to remove the usage of the `UniqueQueue` and replace it with a
normal queue because there is no reason for it to be unique.

Co-authored-by: 6543 <6543@obermui.de>

Fixes #21379

The commits are capped by `setting.UI.FeedMaxCommitNum` so

`len(commits)` is not the correct number. So this PR adds a new

`TotalCommits` field.
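A minimal sketch of the distinction, using a hypothetical `pushPayload` type and `newPushPayload` helper rather than the real payload structs:

```go
package main

import "fmt"

// pushPayload is a hypothetical, reduced payload.
type pushPayload struct {
	Commits      []string // capped list that is actually sent
	TotalCommits int      // number of commits in the push before capping
}

// newPushPayload records the real commit count, then caps the list.
// Assumes maxCommits is not negative.
func newPushPayload(commits []string, maxCommits int) pushPayload {
	p := pushPayload{TotalCommits: len(commits)}
	if len(commits) > maxCommits {
		commits = commits[:maxCommits]
	}
	p.Commits = commits
	return p
}

func main() {
	p := newPushPayload([]string{"c1", "c2", "c3", "c4", "c5", "c6", "c7"}, 5)
	fmt.Println(len(p.Commits), p.TotalCommits)
}
```

Consumers that want to show "N commits pushed" should read `TotalCommits`, since the length of the capped list undercounts large pushes.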

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>