This PR adds a task to the cron service to allow garbage collection of
LFS meta objects. As repositories may have a large number of
LFSMetaObjects, an updated column is added to this table and it is used
to perform a generational GC to attempt to reduce the amount of work.
(There may need to be a bit more work here but this is probably enough
for the moment.)
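A minimal sketch of the generational idea, assuming a hypothetical `metaObject` type and `gcPass` helper (neither is the actual Gitea code): only objects whose updated timestamp is older than a cutoff are examined, and objects that are still referenced get their timestamp bumped so the next pass skips them.

```go
package main

import "fmt"

// metaObject is a hypothetical stand-in for a row in the LFS meta object table.
type metaObject struct {
	OID         string
	UpdatedUnix int64
}

// gcPass examines only objects last checked before cutoff. Objects that are
// still referenced get their timestamp bumped to now, so a later pass skips
// them; unreferenced ones are reported for removal.
func gcPass(objs []metaObject, referenced map[string]bool, cutoff, now int64) (kept []metaObject, removed []string) {
	for _, o := range objs {
		switch {
		case o.UpdatedUnix >= cutoff:
			// Checked recently: skip the expensive reachability lookup.
			kept = append(kept, o)
		case referenced[o.OID]:
			o.UpdatedUnix = now
			kept = append(kept, o)
		default:
			removed = append(removed, o.OID)
		}
	}
	return kept, removed
}

func main() {
	objs := []metaObject{{"a", 10}, {"b", 10}, {"c", 100}}
	kept, removed := gcPass(objs, map[string]bool{"a": true}, 50, 200)
	fmt.Println(kept, removed)
}
```

The `referenced` map stands in for whatever reachability check the real task performs; the point is only that the updated column limits how many rows each run has to look at.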

Fix #7045

Signed-off-by: Andrew Thornton <art27@cantab.net>

Previously, there was an `import services/webhooks` inside
`modules/notification/webhook`.
This import was removed (after fighting against many import cycles).
Additionally, `modules/notification/webhook` was moved to
`modules/webhook`,
and a few structs/constants were extracted from `models/webhooks` to
`modules/webhook`.

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Some dbs require that all tables have primary keys, see

- #16802

- #21086

We can add a test to keep it from being broken again.

Edit:

~Added missing primary key for `ForeignReference`~ Dropped the
`ForeignReference` table to satisfy the check, so it closes #21086.

More context can be found in comments.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: zeripath <art27@cantab.net>

Close #14601

Fix #3690

Revive of #14601.

Updated to the current code, cleaned up, and added more read/write checks.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Signed-off-by: Andre Bruch <ab@andrebruch.com>

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: Norwin <git@nroo.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

`hex.EncodeToString` has better performance than `fmt.Sprintf("%x",
[]byte)`, so we should use it wherever possible.

I'm not a performance extremist, so I think there are some
exceptions:

- `fmt.Sprintf("%x", func(...)[N]byte())`
  - We can't slice the function return value directly, and it's not worth
    adding lines.

```diff
func A()[20]byte { ... }
- a := fmt.Sprintf("%x", A())
- a := hex.EncodeToString(A()[:]) // invalid
+ tmp := A()
+ a := hex.EncodeToString(tmp[:])
```

- `fmt.Sprintf("%X", []byte)`
  - `strings.ToUpper(hex.EncodeToString(bytes))` has even worse
    performance.

Change all license headers to comply with REUSE specification.

Fix #16132

Co-authored-by: flynnnnnnnnnn <flynnnnnnnnnn@github>

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

Unfortunately, #21549 changed the names of test cases without changing
their associated fixture directories.

Fix #21854

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

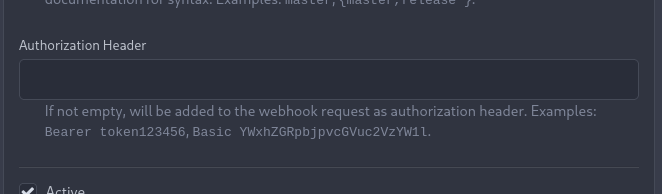

_This is a different approach to #20267, I took the liberty of adapting
some parts, see below_

## Context

In some cases, a webhook endpoint requires some kind of authentication.
The usual way is by sending a static `Authorization` header, with a
given token. For instance:

- Matrix expects a `Bearer <token>` (already implemented, by storing the
  header cleartext in the metadata - which is buggy on retry #19872)
- TeamCity #18667
- Gitea instances #20267
- SourceHut https://man.sr.ht/graphql.md#authentication-strategies (this
  is my actual personal need :)

## Proposed solution

Add a dedicated encrypted column to the webhook table (instead of storing
it as meta as proposed in #20267), so that it is available for all
present and future hook types (especially the custom ones #19307).

This would also solve the buggy matrix retry #19872.

As a first step, I would recommend focusing on the backend logic and
improving the frontend at a later stage. For now the UI is a simple
`Authorization` field (which could be later customized with `Bearer` and
`Basic` switches).

The header name is hard-coded, since I couldn't find any use case
justifying otherwise.
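To make the delivery side concrete, here is a rough sketch under the assumption that the stored value has already been decrypted; `newHookRequest` and the example URL are hypothetical and not part of this PR.

```go
package main

import (
	"bytes"
	"fmt"
	"net/http"
)

// newHookRequest builds a webhook delivery request. authHeader is the
// already-decrypted value configured for the hook; an empty string means
// that no Authorization header is sent.
func newHookRequest(url string, payload []byte, authHeader string) (*http.Request, error) {
	req, err := http.NewRequest(http.MethodPost, url, bytes.NewReader(payload))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Content-Type", "application/json")
	if authHeader != "" {
		// The header name is fixed; only its value is configurable.
		req.Header.Set("Authorization", authHeader)
	}
	return req, nil
}

func main() {
	req, err := newHookRequest("https://example.com/hook", []byte(`{}`), "Bearer s3cr3t")
	if err != nil {
		panic(err)
	}
	fmt.Println(req.Header.Get("Authorization"))
}
```

Because the value is attached when the request is built, a retry that rebuilds the request from the hook configuration would carry the same header.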

## Questions

- What do you think of this approach? @justusbunsi @Gusted @silverwind

- ~~How are the migrations generated? Do I have to manually create a new
  file, or is there a command for that?~~
- ~~I started adding it to the API: should I complete it or should I
  drop it? (I don't know how much the API is actually used)~~

## Done as well:

- add a migration for the existing matrix webhooks and remove the
  `Authorization` logic there

_Closes #19872_

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: Gusted <williamzijl7@hotmail.com>

Co-authored-by: delvh <dev.lh@web.de>

The OAuth spec [defines two types of
client](https://datatracker.ietf.org/doc/html/rfc6749#section-2.1),
confidential and public. Previously Gitea assumed all clients to be
confidential.

> OAuth defines two client types, based on their ability to authenticate
> securely with the authorization server (i.e., ability to
> maintain the confidentiality of their client credentials):
>
> confidential
> Clients capable of maintaining the confidentiality of their
> credentials (e.g., client implemented on a secure server with
> restricted access to the client credentials), or capable of secure
> client authentication using other means.
>
> **public
> Clients incapable of maintaining the confidentiality of their
> credentials (e.g., clients executing on the device used by the resource
> owner, such as an installed native application or a web browser-based
> application), and incapable of secure client authentication via any
> other means.**
>
> The client type designation is based on the authorization server's
> definition of secure authentication and its acceptable exposure levels
> of client credentials. The authorization server SHOULD NOT make
> assumptions about the client type.

https://datatracker.ietf.org/doc/html/rfc8252#section-8.4

> Authorization servers MUST record the client type in the client
> registration details in order to identify and process requests
> accordingly.

Require PKCE for public clients:

https://datatracker.ietf.org/doc/html/rfc8252#section-8.1

> Authorization servers SHOULD reject authorization requests from native
> apps that don't use PKCE by returning an error message

Fixes #21299

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

When actions besides "delete" are performed on issues, the milestone
counter is updated. However, since deleting issues goes through a
different code path, the associated milestone's count wasn't being
updated, resulting in inaccurate counts until another issue in the same
milestone had a non-delete action performed on it.

I verified this change fixes the inaccurate counts using a local docker
build.

Fixes #21254

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Fixes #21250

Related #20414

Conan packages don't have to follow SemVer.

The migration fixes the setting for all existing Conan and Generic
(#20414) packages.

In #21031 we discovered that on very big tables PostgreSQL will use a
search involving the sort term in preference to the restrictive index.

Therefore we add another index for PostgreSQL and update the original migration.

Fix #21031

Signed-off-by: Andrew Thornton <art27@cantab.net>

Unfortunately, some keys are too big to fit within the 65535 limit of TEXT on MySQL,
which causes issues with these large keys.

Therefore increase these fields to MEDIUMTEXT.

Fix #20894

Signed-off-by: Andrew Thornton <art27@cantab.net>

* merge `CheckLFSVersion` into `InitFull` (renamed from `InitWithSyncOnce`)

* remove the `Once` during git init, no data-race now

* for doctor sub-commands, `InitFull` should only be called in initialization stage

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

WebAuthn has updated its specification to set the maximum size of the
CredentialID to 1023 bytes. This is somewhat larger than our current
size and therefore we need to migrate.

The PR changes the struct to add CredentialIDBytes and migrates the CredentialID string
to the bytes field before another migration drops the old CredentialID field. A further
migration then renames this field back.

Fix #20457

Signed-off-by: Andrew Thornton <art27@cantab.net>

When viewing a subdirectory, the commit status icon shown next to the
latest commit to that directory in the table incorrectly showed the
status of the HEAD commit instead of the latest commit for that directory.

Support synchronizing with the push mirrors whenever new commits are pushed or synced from a pull mirror.

Related Issues: #18220

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Unfortunately, the previous PR #20035 created indices that were not helpful
for SQLite. This PR adjusts these after testing using the try.gitea.io db.

Fix #20129

Signed-off-by: Andrew Thornton <art27@cantab.net>

There appears to be a strange bug whereby the comment_id index can sometimes be missed
or missing from the action table despite the Sync2 that should create it in the earlier
part of this migration. However, looking through the code for Sync2, there is no need
for this pre-code to exist, and Sync2 should drop/create the indices as necessary.

I therefore think we should simplify the migration to just a Sync2.

Signed-off-by: Andrew Thornton <art27@cantab.net>

* clean git support for ver < 2.0

* fine tune tests for markup (which requires git module)

* remove unnecessary comments

* try to fix tests

* try test again

* use const for GitVersionRequired instead of var

* try to fix integration test

* Refactor CheckAttributeReader to make a *git.Repository version

* update document for commit signing with Gitea's internal gitconfig

* update document for commit signing with Gitea's internal gitconfig

Co-authored-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

* Move access and repo permission to models/perm/access

* fix test

* fix git test

* Move functions sequence

* Some improvements per @KN4CK3R and @delvh

* Move issues related code to models/issues

* Move some issues related sub package

* Merge

* Fix test

* Fix test

* Fix test

* Fix test

* Rename some files

Upgrade builder to v0.3.11

Upgrade xorm to v1.3.1 and fixed some hidden bugs.

Replace #19821

Replace #19834

Included #19850

Co-authored-by: zeripath <art27@cantab.net>

* Fix indention

Signed-off-by: kolaente <k@knt.li>

* Add option to merge a pr right now without waiting for the checks to succeed

Signed-off-by: kolaente <k@knt.li>

* Fix lint

Signed-off-by: kolaente <k@knt.li>

* Add scheduled pr merge to tables used for testing

Signed-off-by: kolaente <k@knt.li>

* Add status param to make GetPullRequestByHeadBranch reusable

Signed-off-by: kolaente <k@knt.li>

* Move "Merge now" to a separate button to make the ui clearer

Signed-off-by: kolaente <k@knt.li>

* Update models/scheduled_pull_request_merge.go

Co-authored-by: 赵智超 <1012112796@qq.com>

* Update web_src/js/index.js

Co-authored-by: 赵智超 <1012112796@qq.com>

* Update web_src/js/index.js

Co-authored-by: 赵智超 <1012112796@qq.com>

* Re-add migration after merge

* Fix frontend lint

* Fix version compare

* Add vendored dependencies

* Add basic tests

* Make sure the api route is capable of scheduling PRs for merging

* Fix comparing version

* make vendor

* adopt refactor

* apply suggestion: User -> Doer

* init var once

* Fix Test

* Update templates/repo/issue/view_content/comments.tmpl

* adopt

* nits

* next

* code format

* lint

* use same name schema; rm CreateUnScheduledPRToAutoMergeComment

* API: can not create schedule twice

* Add TestGetBranchNamesForSha

* nits

* new go routine for each pull to merge

* Update models/pull.go

Co-authored-by: a1012112796 <1012112796@qq.com>

* Update models/scheduled_pull_request_merge.go

Co-authored-by: a1012112796 <1012112796@qq.com>

* fix & add renaming suggestions

* Update services/automerge/pull_auto_merge.go

Co-authored-by: a1012112796 <1012112796@qq.com>

* fix conflict relicts

* apply latest refactors

* fix: migration after merge

* Update models/error.go

Co-authored-by: delvh <dev.lh@web.de>

* Update options/locale/locale_en-US.ini

Co-authored-by: delvh <dev.lh@web.de>

* Update options/locale/locale_en-US.ini

Co-authored-by: delvh <dev.lh@web.de>

* adapt latest refactors

* fix test

* use more context

* skip potential edgecases

* document func usage

* GetBranchNamesForSha() -> GetRefsBySha()

* start refactoring

* adjust to new changes

* nit

* docu nit

* the great check move

* move checks for branchprotection into own package

* resolve todo now ...

* move & rename

* unexport if possible

* fix

* check if merge is allowed before merge on scheduled pull

* debug

* wording

* improve SetDefaults & nits

* NotAllowedToMerge -> DisallowedToMerge

* fix test

* merge files

* use package "errors"

* merge files

* add string names

* other implementation for gogit

* adapt refactor

* more context for models/pull.go

* GetUserRepoPermission use context

* more ctx

* use context for loading pull head/base-repo

* more ctx

* more ctx

* models.LoadIssueCtx()

* models.LoadIssueCtx()

* Handle pull_service.Merge in one DB transaction

* add TODOs

* next

* next

* next

* more ctx

* more ctx

* Start refactoring structure of old pull code ...

* move code into new packages

* shorter names ... and finish **restructure**

* Update models/branches.go

Co-authored-by: zeripath <art27@cantab.net>

* finish UpdateProtectBranch

* more and fix

* update datum

* template: use "svg" helper

* rename prQueue 2 prPatchCheckerQueue

* handle automerge in queue

* lock pull on git&db actions ...

* lock pull on git&db actions ...

* add TODO notes

* the regex

* transaction in tests

* GetRepositoryByIDCtx

* shorter table name and lint fix

* close transaction before notify

* Update models/pull.go

* next

* CheckPullMergable check all branch protections!

* Update routers/web/repo/pull.go

* CheckPullMergable check all branch protections!

* Revert "PullService lock via pullID (#19520)" (for now...)

This reverts commit 6cde7c9159a5ea75a10356feb7b8c7ad4c434a9a.

* Update services/pull/check.go

* Use for a repo action one database transaction

* Apply suggestions from code review

* Apply suggestions from code review

Co-authored-by: delvh <dev.lh@web.de>

* Update services/issue/status.go

Co-authored-by: delvh <dev.lh@web.de>

* Update services/issue/status.go

Co-authored-by: delvh <dev.lh@web.de>

* use db.WithTx()

* gofmt

* make pr.GetDefaultMergeMessage() context aware

* make MergePullRequestForm.SetDefaults context aware

* use db.WithTx()

* pull.SetMerged only with context

* fix deadlock in `test-sqlite\#TestAPIBranchProtection`

* dont forget templates

* db.WithTx allow to set the parentCtx

* handle db transaction in service packages but not router

* issue_service.ChangeStatus just caused another deadlock :/

it has something to do with how the notification package is handled

* if we merge a pull in one database transaction, we get a lock, because merge invokes the internal API, which can't handle open db sessions to the same repo

* adjust to current master

* Apply suggestions from code review

Co-authored-by: delvh <dev.lh@web.de>

* dont open db transaction in router

* make generate-swagger

* one _success less

* wording nit

* rm

* adapt

* remove not needed test files

* rm less diff & use attr in JS

* ...

* Update services/repository/files/commit.go

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

* adjust db schema for PullAutoMerge

* skip broken pull refs

* more context in error messages

* remove webUI part for another pull

* remove more WebUI only parts

* API: add CancleAutoMergePR

* Apply suggestions from code review

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

* fix lint

* Apply suggestions from code review

* cancle -> cancel

Co-authored-by: delvh <dev.lh@web.de>

* change queue identifier

* fix swagger

* prevent nil issue

* fix and dont drop error

* as per @zeripath

* Update integrations/git_test.go

Co-authored-by: delvh <dev.lh@web.de>

* Update integrations/git_test.go

Co-authored-by: delvh <dev.lh@web.de>

* more declarative integration tests (dedup code)

* use assert.False/True helper

Co-authored-by: 赵智超 <1012112796@qq.com>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Adds a feature [like GitHub has](https://docs.github.com/en/pull-requests/collaborating-with-pull-requests/proposing-changes-to-your-work-with-pull-requests/creating-a-pull-request-from-a-fork) (step 7).

If you create a new PR from a forked repo, you can select (and change later, but only if you are the PR creator/poster) the "Allow edits from maintainers" option.

Then users with write access to the base branch get more permissions on this branch:

* use the update pull request button

* push directly from the command line (`git push`)

* edit/delete/upload files via web UI

* use related API endpoints

You can't merge PRs to this branch with this enabled; you'll need "full" code write permissions.

This feature has a pretty big impact on the permission system. I might have forgotten to change some things or missed security vulnerabilities. In this case, please leave a review or comment on this PR.

Closes #17728

Co-authored-by: 6543 <6543@obermui.de>

Follows: #19284

* The `CopyDir` is only used inside test code

* Rewrite `ToSnakeCase` with more test cases

* The `RedisCacher` only puts strings into the cache, so here we use an internal `toStr` to replace the legacy `ToStr`

* The `UniqueQueue` can use string as ID directly, no need to call `ToStr`

The main purpose is to refactor the legacy `unknwon/com` package.

1. Remove most imports of `unknwon/com`, only `util/legacy.go` imports the legacy `unknwon/com`

2. Use golangci's depguard to process denied packages

3. Fix some incorrect values in golangci.yml, eg, the version should be quoted string `"1.18"`

4. Use correctly escaped content for `go-import` and `go-source` meta tags

5. Refactor `com.Expand` to our stable (and equally fast) `vars.Expand`; our `vars.Expand` can still return partially rendered content even if the template is not good (e.g. a key mismatch).

Follows #19266, #8553, Close #18553, now there are only three `Run..(&RunOpts{})` functions.

* before: `stdout, err := RunInDir(path)`

* now: `stdout, _, err := RunStdString(&git.RunOpts{Dir:path})`

Storing the foreign identifier of an imported issue in the database is a prerequisite to implement idempotent migrations or mirror for issues. It is a baby step towards mirroring that introduces a new table.

At the moment, when an issue is created by the Gitea uploader, it fails if the issue already exists. The Gitea uploader could be modified so that, instead of failing, it looks up the database to find an existing issue and, if it finds one, updates it instead of creating a new one. However, this is not currently possible because a piece of information is missing from the database: the foreign identifier that uniquely represents the issue being migrated is not persisted. With this change, the foreign identifier is stored in the database and the Gitea uploader will then be able to run a query to figure out if a given issue being imported already exists.

The implementation of mirroring for issues, pull requests, releases, etc. can be done in three steps:

1. Store an identifier for the element being mirrored (issue, pull request...) in the database (this is the purpose of these changes)

2. Modify the Gitea uploader to be able to update an existing repository with all it contains (issues, pull request...) instead of failing if it exists

3. Optimize the Gitea uploader to speed up the updates, when possible.

The second step creates code that does not yet exist to enable idempotent migrations with the Gitea uploader. When a migration is done for the first time, the behavior is not changed. But when a migration is done for a repository that already exists, this new code is used to update it.

The third step can use the code created in the second step to optimize and speed up migrations. For instance, when a migration is resumed, an issue that has an update time that is not more recent can be skipped and only newly created issues or updated ones will be updated. Another example of optimization could be that a webhook notifies Gitea when an issue is updated. The code triggered by the webhook would download only this issue and call the code created in the second step to update the issue, as if it was in the process of an idempotent migration.

The ForeignReferences table is added to contain local and foreign ID pairs relative to a given repository. It can later be used for pull requests and other artifacts that can be mirrored. Although the foreign id could be added as a single field in issues or pull requests, it would need to be added to all tables that represent something that can be mirrored. Creating a new table makes for a simpler and more generic design. The drawback is that it requires an extra lookup to obtain the information. However, this extra information is only required during migration or mirroring and does not impact the way Gitea currently works.
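As a rough illustration of the lookup this table enables, here is an in-memory sketch. The `foreignKey` and `referenceTable` types are hypothetical stand-ins for the database table, and the field names are assumptions made for the example.

```go
package main

import "fmt"

// foreignKey identifies an element on the remote forge, scoped to a repository.
type foreignKey struct {
	RepoID       int64
	Type         string // for example "issue"
	ForeignIndex string
}

// referenceTable maps foreign identifiers to local indexes.
type referenceTable struct {
	local map[foreignKey]int64
	next  int64
}

func newReferenceTable() *referenceTable {
	return &referenceTable{local: map[foreignKey]int64{}}
}

// resolve returns the local index for a foreign element. It allocates a new
// index the first time the element is seen and reuses it afterwards, which
// is what lets a repeated import update instead of fail.
func (t *referenceTable) resolve(k foreignKey) (localIndex int64, created bool) {
	if idx, ok := t.local[k]; ok {
		return idx, false
	}
	t.next++
	t.local[k] = t.next
	return t.next, true
}

func main() {
	table := newReferenceTable()
	key := foreignKey{RepoID: 1, Type: "issue", ForeignIndex: "42"}
	fmt.Println(table.resolve(key)) // first import creates the mapping
	fmt.Println(table.resolve(key)) // second import finds the existing one
}
```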

The foreign identifier of an issue or pull request is similar to the identifier of an external user, which is stored in reactions, issues, etc. as OriginalPosterID and so on. The representation of a user is however different and the ability of users to link their account to an external user at a later time is also a logic that is different from what is involved in mirroring or migrations. For these reasons, despite some commonalities, it is unclear at this time how the two tables (foreign reference and external user) could be merged together.

The ForeignID field is extracted from the issue migration context so that it can be dumped in files with dump-repo and later restored via restore-repo.

The GetAllComments downloader method is introduced to simplify the implementation and not overload the Context for the purpose of pagination. It also clarifies in which context the comments are paginated and in which context they are not.

The Context interface is no longer useful for the purpose of retrieving the LocalID and ForeignID since they are now both available from the PullRequest and Issue struct. The Reviewable and Commentable interfaces replace it and serve the same purpose.

The Context data member of PullRequest and Issue becomes a DownloaderContext to clarify that its purpose is to support in-memory operations while the current downloader is acting, and that it is not otherwise persisted. It is, for instance, used by the GitLab downloader to store the IsMergeRequest boolean and sort out issues.

---

[source](https://lab.forgefriends.org/forgefriends/forgefriends/-/merge_requests/36)

Signed-off-by: Loïc Dachary <loic@dachary.org>

Co-authored-by: Loïc Dachary <loic@dachary.org>

* Update the webauthn_credential_id_sequence in Postgres

There is (yet) another problem with v210 in that Postgres will silently allow preset
ID insertions ... but it will not update the sequence value.

This PR simply adds a little step to the end of the v210 migration to update the
sequence number.

Users who have already migrated who find that they cannot insert new
webauthn_credentials into the DB can either run:

```bash
gitea doctor recreate-table webauthn_credential
```

or

```bash
./gitea doctor --run=check-db-consistency --fix
```

which will fix the bad sequence.

Fix #19012

Signed-off-by: Andrew Thornton <art27@cantab.net>

* Fix page and missing return on unadopted repos API

The page must default to 1 if it is not specified, and the handler should return after sending an internal server error.

* Allow ignore pages

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

* logs: add the buffer logger to inspect logs during testing

Signed-off-by: Loïc Dachary <loic@dachary.org>

* migrations: add test for importing pull requests in gitea uploader

Signed-off-by: Loïc Dachary <loic@dachary.org>

* for each git.OpenRepositoryCtx, call Close

* Content is expected to return the content of the log

* test for errors before defer

Co-authored-by: Loïc Dachary <loic@dachary.org>

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

We can't depend on the `latest` version of gofumpt because the output will
not be stable across versions. Lock it down to the latest version
released yesterday and run it again.

v208.go is seriously broken as it misses an ID() check. We need to no-op and remigrate all of the u2f keys.

See #18756

Signed-off-by: Andrew Thornton <art27@cantab.net>

Unfortunately, credentialIDs in u2f are 255 bytes long, which with base32 encoding
becomes 408 bytes. The default size of a xorm string field is only a VARCHAR(255).

This problem is not apparent on SQLite because strings get mapped to TEXT there.

Fix #18727

Signed-off-by: Andrew Thornton <art27@cantab.net>

This PR continues the work in #17125 by progressively ensuring that git
commands run within the request context.

This now means that if there is a git repo already open in the context, it will be used instead of reopening it.

Signed-off-by: Andrew Thornton <art27@cantab.net>

This contains some additional fixes and small nits related to #17957

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Migrate from U2F to Webauthn

Co-authored-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

- Don't use the `ioutil` package anymore, as it hasn't provided anything
  special since Go 1.16:

```
// As of Go 1.16, the same functionality is now provided
// by package io or package os, and those implementations
// should be preferred in new code.
```
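For readers doing the same cleanup elsewhere, a small sketch of the usual one-to-one replacements; the `roundTrip` helper exists only to exercise them.

```go
package main

import (
	"fmt"
	"io"
	"os"
	"path/filepath"
	"strings"
)

// roundTrip writes data into a fresh temporary directory and reads it back,
// using the io/os functions that replace their ioutil counterparts.
func roundTrip(data string) (string, error) {
	dir, err := os.MkdirTemp("", "example-") // was ioutil.TempDir
	if err != nil {
		return "", err
	}
	defer os.RemoveAll(dir)

	path := filepath.Join(dir, "note.txt")
	if err := os.WriteFile(path, []byte(data), 0o600); err != nil { // was ioutil.WriteFile
		return "", err
	}
	content, err := os.ReadFile(path) // was ioutil.ReadFile
	if err != nil {
		return "", err
	}
	all, err := io.ReadAll(strings.NewReader(string(content))) // was ioutil.ReadAll
	if err != nil {
		return "", err
	}
	return string(all), nil
}

func main() {
	out, err := roundTrip("hello")
	if err != nil {
		panic(err)
	}
	fmt.Println(out)
}
```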

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

* Team permission allow different unit has different permission

* Finish the interface and the logic

* Fix lint

* Fix translation

* align center for table cell content

* Fix fixture

* merge

* Fix test

* Add deprecated

* Improve code

* Add tooltip

* Fix swagger

* Fix newline

* Fix tests

* Fix tests

* Fix test

* Fix test

* Max permission of external wiki and issues should be read

* Move team units with limited max level below units table

* Update label and column names

* Some improvements

* Fix lint

* Some improvements

* Fix template variables

* Add permission docs

* improve doc

* Fix fixture

* Fix bug

* Fix some bug

* fix

* gofumpt

* Integration test for migration (#18124)

integrations: basic test for Gitea {dump,restore}-repo

This is a first step for integration testing of DumpRepository and
RestoreRepository. It:

- runs a Gitea server,
- dumps a repo via DumpRepository to the filesystem,
- restores the repo via RestoreRepository from the filesystem,
- dumps the restored repository to the filesystem,
- compares the first and second dump and expects them to be identical

The verification is trivial and the goal is to add more tests for each
topic of the dump.

Signed-off-by: Loïc Dachary <loic@dachary.org>

* Fix bug

Co-authored-by: Lauris BH <lauris@nix.lv>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: Aravinth Manivannan <realaravinth@batsense.net>

- The current implementation of `RandomString` doesn't give you the maximum possible randomness. It gives you 6*`length` bits instead of the possible 8*`length` bits (i.e. `length` bytes) of randomness. This is because each random byte in `RandomString` is limited to a maximum value of 63 so that it can be represented as a letter or digit.

- The recommendation for pbkdf2 is to use a salt of 64+ bits, which `RandomString` doesn't provide with a length of 10. Instead of increasing 10 to a higher number, this patch adds a new function called `RandomBytes`, which does guarantee 8*`length` bits (i.e. `length` bytes) of randomness.

- Use hexadecimal to store the byte values in the database since, as mentioned, the raw bytes don't convert nicely to a string. The stored value will always have a length of 32 (with `length` being 16).

- When we detect on `Authenticate` (source: db) that a user has the old salt format, re-hash the password so that the user's password is hashed with the larger salt.
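A minimal sketch of the salt generation described above, built on `crypto/rand`. The lower-case `randomBytes` and `newSalt` helpers are illustrative and not necessarily how the patch implements `RandomBytes`.

```go
package main

import (
	"crypto/rand"
	"encoding/hex"
	"fmt"
)

// randomBytes returns length bytes from the system CSPRNG, i.e. the full
// 8*length bits of randomness.
func randomBytes(length int) ([]byte, error) {
	buf := make([]byte, length)
	if _, err := rand.Read(buf); err != nil {
		return nil, err
	}
	return buf, nil
}

// newSalt produces a 16-byte salt and hex-encodes it for storage, which
// always yields a 32-character string.
func newSalt() (string, error) {
	raw, err := randomBytes(16)
	if err != nil {
		return "", err
	}
	return hex.EncodeToString(raw), nil
}

func main() {
	salt, err := newSalt()
	if err != nil {
		panic(err)
	}
	fmt.Println(len(salt))
}
```

By comparison, a 10-character string with 6 bits per character carries only 60 bits, which is below the 64-bit recommendation mentioned above.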

Thanks to @zeripath for working out the rough edges from my first commit 😄.

Co-authored-by: lafriks <lauris@nix.lv>

Co-authored-by: zeripath <art27@cantab.net>

* Add support for ssh commit signing

* Split out ssh verification to separate file

* Show ssh key fingerprint on commit page

* Update sshsig lib

* Make sure we verify against correct namespace

* Add ssh public key verification via ssh signatures

When adding a public ssh key also validate that this user actually

owns the key by signing a token with the private key.

* Remove some gpg references and make verify key optional

* Fix spaces indentation

* Update options/locale/locale_en-US.ini

Co-authored-by: Gusted <williamzijl7@hotmail.com>

* Update templates/user/settings/keys_ssh.tmpl

Co-authored-by: Gusted <williamzijl7@hotmail.com>

* Update options/locale/locale_en-US.ini

Co-authored-by: Gusted <williamzijl7@hotmail.com>

* Update options/locale/locale_en-US.ini

Co-authored-by: Gusted <williamzijl7@hotmail.com>

* Update models/ssh_key_commit_verification.go

Co-authored-by: Gusted <williamzijl7@hotmail.com>

* Reword ssh/gpg_key_success message

* Change Badsignature to NoKeyFound

* Add sign/verify tests

* Fix upstream api changes to user_model User

* Match exact on SSH signature

* Fix code review remarks

Co-authored-by: Gusted <williamzijl7@hotmail.com>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

This PR contains multiple fixes. The most important of which is:

* Prevent hang in git cat-file if the repository is not a valid repository

Unfortunately, it appears that if git cat-file is run in an invalid
repository, it will hang until stdin is closed. This will result in
deadlocked /pulls pages and dangling git cat-file calls if someone tries
to review a broken repository or if pulls exist for a broken
repository.

Fix #14734

Fix #9271

Fix #16113

Otherwise, a few other small fixes are included, which this PR was initially intended to address:

* Fix panic on partial compares due to missing PullRequestWorkInProgressPrefixes

* Fix links on pulls pages due to regression from #17551 - by making most /issues routes match /pulls too - Fix #17983

* Fix links on feeds pages due to another regression from #17551 but also fix issue with syncing tags - Fix #17943

* Add missing locale entries for oauth group claims

* Prevent NPEs if ColorFormat is called on nil users, repos or teams.

Running `make test-backend` will delete `data/` due to reloading the configuration and resetting the appdatapath.

This PR removes this unnecessary config reload but also adds extra code in to the unittest main to prevent its cleanup from deleting the wrong directory.

Signed-off-by: Andrew Thornton <art27@cantab.net>

* Check if the column exists before renaming; if it exists, just return with no error

* Also check if the errors column exists

* Add comment for migration

* Fix sqlite test

* Improve install code to avoid low-level mistakes.

If a user tries to do a re-install in a Gitea database, they get a warning and have to double-check.

When Gitea runs, it never creates an empty app.ini automatically.

Also some small (related) refactoring:

* Refactor db.InitEngine related logic to make it cleaner (especially for the install code)

* Move some i18n strings out of setting.go so that setting.go can be maintained more easily.

* Show errors in CLI code if an incorrect app.ini is used.

* APP_DATA_PATH is created when installing, and checked when starting (no empty directory is created any more).

We have the `AppState` module now, which can store app-related data easily. We do not need to create separate tables for each feature.

So the update checker can use `AppState` instead of a dedicated one-row table.

And the code of the update checker is moved from `models` to `modules`.

Gitea writes its own AppPath into git hook scripts. If Gitea's AppPath changes, then the git push will fail.

This PR:

* Introduce an AppState module, which can persist app states into the database

* During GlobalInit, Gitea will check if the current AppPath is the same as the last one. If they don't match, Gitea will sync git hooks.

* Refactor some code to make it clearer.

* Also, "Detect if gitea binary's name changed" #11341 is related; we call models.RewriteAllPublicKeys to update the ssh authorized_keys file
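The startup check can be pictured roughly like this. It is only a sketch: `stateStore`, `keyAppPath` and `syncHooksIfMoved` are hypothetical names, and a map stands in for the database-backed state.

```go
package main

import "fmt"

// stateStore is a hypothetical stand-in for a database-backed key/value
// state table.
type stateStore map[string]string

// keyAppPath is a made-up key name used only for this example.
const keyAppPath = "runtime.app_path"

// syncHooksIfMoved compares the persisted path with the current one. It
// calls resync only when they differ and then records the new path, so the
// work is done once per change rather than on every start.
func syncHooksIfMoved(store stateStore, currentPath string, resync func() error) (bool, error) {
	if store[keyAppPath] == currentPath {
		return false, nil
	}
	if err := resync(); err != nil {
		return false, err
	}
	store[keyAppPath] = currentPath
	return true, nil
}

func main() {
	store := stateStore{keyAppPath: "/usr/local/bin/gitea"}
	resync := func() error {
		fmt.Println("re-writing git hooks")
		return nil
	}
	changed, _ := syncHooksIfMoved(store, "/opt/gitea/gitea", resync)
	fmt.Println(changed)
	changed, _ = syncHooksIfMoved(store, "/opt/gitea/gitea", resync)
	fmt.Println(changed)
}
```

The new path is only persisted after a successful resync, so a failed sync is retried on the next start.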

* issue content history

* Use timeutil.TimeStampNow() for content history time instead of issue/comment.UpdatedUnix (which are not updated in time)

* i18n for frontend

* refactor

* clean up

* fix refactor

* re-format

* temp refactor

* follow db refactor

* rename IssueContentHistory to ContentHistory, remove empty model tags

* fix html

* use avatar refactor to generate avatar url

* add unit test, keep at most 20 history revisions.

* re-format

* syntax nit

* Add issue content history table

* Update models/migrations/v197.go

Co-authored-by: 6543 <6543@obermui.de>

* fix merge

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: Lauris BH <lauris@nix.lv>

- Update default branch if needed

- Update protected branch if needed

- Update the base branch name of all unmerged pull requests

- Rename git branch

- Record the rename and automatically redirect from the old branch in the UI

Signed-off-by: a1012112796 <1012112796@qq.com>

Co-authored-by: delvh <dev.lh@web.de>